Announced less than 24 hours ago at the Warsaw MUM: the first (and hopefully not the last) MikroTik shot at high-end routing.

Update 2012-07-16: Tilera has made a press release confirming their processors will be used in the CCR-1036. You can read the full thing here.

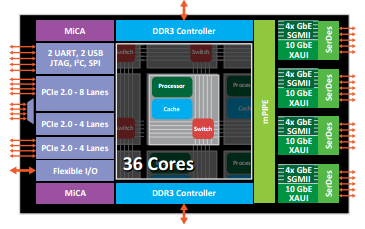

Mikrotik CLOUD CORE Router CCR-1036

- 36-core networking CPU (1.2 GHz per core)

- New 64-bit processor – assuming this one

- Future models will support 10 Gigabit SFP+ configurations

- 12 Mbytes total on-chip cache

- High speed encryption engine

- 4 x SFP ports

- 12 x Gigabit Ethernet ports

- Colour Touchscreen LCD

- 1U Rackmount case

- 16 Gigabit throughput

- 15 Million+ Packets Per Second on Fast-Path

- 8 Million+ Packets Per Second on Standard-Path

- All ports directly connected to CPU (we assume this means no switch chips will be present)
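To put the quoted packet rates in context, here is a rough back-of-the-envelope sketch. It assumes "16 Gigabit throughput" means 16 ports at 1 Gbit/s each, counted in one direction (the announcement does not say), and uses standard Ethernet framing overhead. The function names are made up for illustration.

```python
# Back-of-the-envelope check of the quoted packet rates.
# Assumption: "16 Gigabit throughput" = 16 ports x 1 Gbit/s, one direction.

PREAMBLE_BYTES = 8          # preamble + start-of-frame delimiter
INTERFRAME_GAP_BYTES = 12   # mandatory idle time between frames
MIN_FRAME_BYTES = 64        # minimum Ethernet frame, including FCS


def line_rate_pps(link_bps: float, frame_bytes: int) -> float:
    """Maximum frames per second on an Ethernet link for a given frame size."""
    wire_bits = (frame_bytes + PREAMBLE_BYTES + INTERFRAME_GAP_BYTES) * 8
    return link_bps / wire_bits


def frame_size_for_pps(link_bps: float, pps: float) -> float:
    """Frame size in bytes at which `pps` packets per second fills the link."""
    wire_bytes = link_bps / pps / 8
    return wire_bytes - PREAMBLE_BYTES - INTERFRAME_GAP_BYTES


if __name__ == "__main__":
    total_bps = 16 * 1e9
    mpps = line_rate_pps(total_bps, MIN_FRAME_BYTES) / 1e6
    print(f"64-byte line rate at 16 Gbit/s: {mpps:.1f} Mpps")
    print(f"15 Mpps fills 16 Gbit/s at ~{frame_size_for_pps(total_bps, 15e6):.0f}-byte frames")
    print(f"8 Mpps fills 16 Gbit/s at ~{frame_size_for_pps(total_bps, 8e6):.0f}-byte frames")
```

Under those assumptions, line rate with minimum-size frames on all 16 ports would need roughly 23.8 Mpps, so the quoted 15 Mpps fast-path figure corresponds to full throughput only once frames are somewhat larger than the minimum.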

The release date is said to be sometime this summer; however, given previous releases, the author's opinion is to take this with a grain of salt. A redundant PSU version is also said to be planned for those requiring higher reliability, given the high performance/throughput of the device. The router is suspected to be based on the TILE-Gx8036 processor, a 36-core beast built for networking applications.

Here’s Greg’s take on it all: http://gregsowell.com/?p=3625

My Opinions (Andrew Cox / Omega-00)

While I’m super excited about the prospect of something that can handle routing at wirespeed, likely alongside a bunch of firewall, filter and QoS settings, I’m also a little concerned about how CPU load will be handled and whether any additional failsafes will be put in place to make this product as reliable as it needs to be.

Given we’re still at a place where we can’t get support and maintenance contracts from MikroTik, the platform needs to be as stable as a rock. While I find this is pretty much the case with all basic features, there are still some overlooked issues that pop up over time, with specific features causing memory leaks and the like.

At present I’ve taken a liking to running systems either with:

a) a remote access card allowing direct console input and the ability to power cycle the router independently of it being responsive.

b) ESXi as the base OS with RouterOS running on top of it, to allow an extra layer of protection and management (this also gives the ability to back up and restore in the event a version upgrade goes bad).

c) a dual boot loader, allowing fallback to a previous working version in the event of some sort of bootup failure.

My guesstimate on pricing: $1500-$1800 USD

My Opinions (Andrew Thrift):

This is a move in the right direction for Mikrotik. The Cloud Core product line will provide a viable alternative to the Juniper MX5 and Cisco ASR-900x series of routers for Ethernet-based enterprise and small ISP networks. It will also provide users with a Mikrotik-supported platform that can provide over 10 Gigabit of throughput, where previously they were forced to use a 3rd party x86 server.

Based on the information released so far, this product appears to be:

– Using the new Tilera GX8036 processor

– Using the 6windgate software, a replacement for the Linux networking stack. (Confirmed false by 6windgate.)

These will allow Mikrotik the following features

Edit: While 6windgate software is not being used for this, we may still see some of these features from MikroTik directly.

– Allocation of Tiles to different functions e.g. 1st tile can be used for “Control” while next 6 tiles are used for packet processing

– Fast Path packet processing: the first packets of a flow are inspected (slow path), while subsequent packets in that flow do not need to be inspected and so do not reach the CPU. This will boost raw throughput, and will integrate with Queue Trees, allowing for very efficient traffic shaping systems.

– Hardware-based “virtualisation”: multiple instances of RouterOS will be able to run on a group of Tiles at native speed, as no hypervisor is required.
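The fast path / slow path split described in the list above can be illustrated with a generic flow cache. This is only a sketch of the general technique, not MikroTik's or Tilera's implementation: the `FlowKey`, `FlowCache` and `inspect` names are hypothetical, the "inspection" is a toy rule, and things like cache expiry and Queue Tree integration are left out.

```python
from typing import Callable, Dict, NamedTuple


class FlowKey(NamedTuple):
    """5-tuple identifying a flow (hypothetical structure for illustration)."""
    src_ip: str
    dst_ip: str
    proto: int
    src_port: int
    dst_port: int


class FlowCache:
    """Cache the slow-path verdict per flow so later packets skip inspection."""

    def __init__(self, slow_path: Callable[[FlowKey], str]) -> None:
        self._slow_path = slow_path
        self._verdicts: Dict[FlowKey, str] = {}
        self.slow_hits = 0
        self.fast_hits = 0

    def handle(self, key: FlowKey) -> str:
        verdict = self._verdicts.get(key)
        if verdict is not None:
            # Fast path: flow already seen, reuse the cached decision.
            self.fast_hits += 1
            return verdict
        # Slow path: first packet of the flow gets the full inspection.
        self.slow_hits += 1
        verdict = self._slow_path(key)
        self._verdicts[key] = verdict
        return verdict


def inspect(key: FlowKey) -> str:
    """Toy slow-path policy (an assumption): drop telnet, forward the rest."""
    return "drop" if key.dst_port == 23 else "forward"


if __name__ == "__main__":
    cache = FlowCache(inspect)
    web = FlowKey("10.0.0.1", "192.0.2.10", 6, 40000, 80)
    for _ in range(3):
        cache.handle(web)
    print(f"slow path: {cache.slow_hits}, fast path: {cache.fast_hits}")
```

In this sketch only the first packet of each flow pays for inspection; every later packet is a dictionary lookup, which is the basic reason a fast path can raise raw throughput.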

In a design change with the new Cloud Core Routers, Mikrotik look to have FINALLY moved to a standard metal casing with a printed plastic sticker that has cutouts for the connectors. I hope this is adopted across the RB2011 line; it makes the products look far more professional and will of course lower manufacturing costs, since there is no need to retool for different model variations.

In the future I hope to see a modular Cloud Core Router product that can take two PSUs, either AC or DC, and has flexible module bays with options such as 2x SFP+, 8x SFP and 8x RJ45. This would allow providers to build resilient MPLS networks on modern high-speed links, and would suit the modern data centre and Metro Ethernet applications.